How latency, bandwidth, and throughput impact Internet speed

Internet speed, or how fast data transfers across a network, is measured using several metrics: latency, bandwidth, and throughput.

This article explains these metrics and how TCP, the protocol that carries most data over the Internet today, affects them.

Sending off your data packets

Accessing the Internet is based on an exchange of information. When you shop online or stream a movie, your device sends a request as a flow of data packets. In turn, the receiver responds by sending back another flow of data packets.

When using TCP, these data packets are sent in sequential order through a network of lanes to the destination. When they arrive, a confirmation (an acknowledgment) is sent back to your device. Only a limited number of packets can be sent without receiving confirmation that the prior packets have reached their destination.

At the same time, the number of packets that can travel through a lane during a specific amount of time is also limited. The maximum rate at which data packets can travel through the network is called bandwidth.

However, bandwidth isn’t the only element that impacts your real Internet speed. A number of factors, including the physical distance to the destination and any accidents that happen along the road, can delay your packets’ arrival and, ultimately, the confirmation. The time it takes for your packets to reach their destination is called latency.
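To see how the limit on unconfirmed packets and latency interact, here is a minimal sketch in Python. It assumes the sender may have at most one "window" of unconfirmed data in flight per round trip, so the function names, the 64 KiB window, and the round-trip times are illustrative values rather than anything measured.

```python
def window_limited_throughput(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound in bits/s when at most window_bytes may be unconfirmed.

    Assumption: one full window is delivered and confirmed per round trip.
    """
    if rtt_seconds <= 0:
        raise ValueError("rtt_seconds must be positive")
    return window_bytes * 8 / rtt_seconds


def effective_throughput(bandwidth_bps: float, window_bytes: int,
                         rtt_seconds: float) -> float:
    """The connection can go no faster than the lower of the two limits."""
    return min(bandwidth_bps, window_limited_throughput(window_bytes, rtt_seconds))


if __name__ == "__main__":
    link = 100_000_000   # hypothetical 100 Mbit/s link
    window = 64 * 1024   # hypothetical 64 KiB of unconfirmed data allowed
    for rtt in (0.010, 0.100, 0.300):
        mbps = effective_throughput(link, window, rtt) / 1e6
        print(f"RTT {rtt * 1000:.0f} ms: about {mbps:.1f} Mbit/s")
```

With these example numbers, the same link delivers far less as the round-trip time grows, which is why a high-bandwidth connection can still feel slow over long distances.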

Congestion on the highway

When each packet travels through the network, it passes through multiple nodes where it gets redirected toward its destination. When your packet arrives at a node at the same time as multiple other packets, it gets queued.

Unfortunately, your packet could also be dropped or lost at this stage, in which case your device won’t receive confirmation that it reached its destination. The packet then has to be resent, delaying the dispatch of the following packets.
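The queuing and dropping described above can be sketched as a small Python model. It assumes a node with a fixed-size buffer that discards any packet arriving while the buffer is full (often called "drop-tail"). Real routers use a range of queue policies, and the class name `NodeQueue` and its methods are hypothetical.

```python
from collections import deque


class NodeQueue:
    """A toy network node with a fixed-size buffer (drop-tail assumption)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.buffer = deque()   # packets waiting to be forwarded
        self.dropped = []       # packets discarded because the buffer was full

    def arrive(self, packet) -> bool:
        """Queue the packet, or drop it if the buffer is already full."""
        if len(self.buffer) >= self.capacity:
            self.dropped.append(packet)
            return False
        self.buffer.append(packet)
        return True

    def forward(self):
        """Send the oldest queued packet onward, or None if the queue is empty."""
        return self.buffer.popleft() if self.buffer else None


if __name__ == "__main__":
    node = NodeQueue(capacity=2)
    for name in ("A", "B", "C"):   # three packets arrive at the same time
        status = "queued" if node.arrive(name) else "dropped"
        print(name, status)
```

In this sketch the third packet never leaves the node, so its sender would receive no confirmation and would have to send it again.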

These packet losses signal to TCP that the network is congested. To ease the traffic, the protocol automatically applies a mechanism called the AIMD (additive increase/multiplicative decrease) algorithm: the lost packet is resent, and the sending rate is cut to roughly half of what it was.

This not only impacts the speed at which the lost packet is resent but the speed of the following packets as well: their speed increases incrementally, and only as traffic improves.

Ultimately, even minor packet loss delays all your packets reaching their destination and, of course, the response to your request. The number of data packets that can actually be exchanged on a network during a specific amount of time is called throughput.
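The halve-then-recover pattern can be illustrated with a simplified Python simulation. It tracks a sending window measured in packets per round trip, adds one packet per round when nothing is lost, and halves the window after a loss. The function name, the starting window, and the loss schedule are assumptions for illustration, and real TCP implementations include further phases (such as slow start) that are left out here.

```python
def aimd(loss_rounds, rounds, start=1.0, increase=1.0, decrease=0.5):
    """Return the window size used in each round under a simplified AIMD rule.

    loss_rounds: set of round numbers in which a packet loss is detected.
    """
    window = start
    history = []
    for r in range(rounds):
        history.append(window)
        if r in loss_rounds:
            # Multiplicative decrease: cut the window, but never below 1 packet.
            window = max(1.0, window * decrease)
        else:
            # Additive increase: one more packet per round trip.
            window += increase
    return history


if __name__ == "__main__":
    # A single loss in round 4 of an 8-round transfer.
    print(aimd(loss_rounds={4}, rounds=8))
    # prints [1.0, 2.0, 3.0, 4.0, 5.0, 2.5, 3.5, 4.5]
```

After the loss, the window needs several rounds to climb back to where it was, and each of those rounds costs one round trip, so recovery takes longer on high-latency paths.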

To calculate the maximum throughput on a TCP connection, you can use the Mathis formula and this online calculator.
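As a rough illustration, the Mathis formula is commonly written as throughput ≤ (MSS / RTT) × (C / √p), where MSS is the maximum segment size, RTT is the round-trip time, p is the packet loss rate, and C is a constant. The Python sketch below assumes C = √(3/2) ≈ 1.22, one commonly quoted value. Some calculators use C = 1, so results may differ from the tool you use. The function name and the example inputs are hypothetical.

```python
import math


def mathis_throughput(mss_bytes: int, rtt_seconds: float, loss_rate: float,
                      c: float = math.sqrt(3 / 2)) -> float:
    """Approximate upper bound on TCP throughput in bits per second.

    Assumption: c defaults to sqrt(3/2); other sources use slightly
    different constants.
    """
    if rtt_seconds <= 0:
        raise ValueError("rtt_seconds must be positive")
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in the range (0, 1]")
    return (mss_bytes * 8 / rtt_seconds) * (c / math.sqrt(loss_rate))


if __name__ == "__main__":
    # Hypothetical path: 1460-byte segments, 100 ms round trip, 0.01% loss.
    bps = mathis_throughput(1460, 0.100, 0.0001)
    print(f"about {bps / 1e6:.1f} Mbit/s")
```

Because the loss rate sits under a square root, quadrupling the loss rate halves the estimate, while doubling the round-trip time also halves it.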

VPN Accelerator

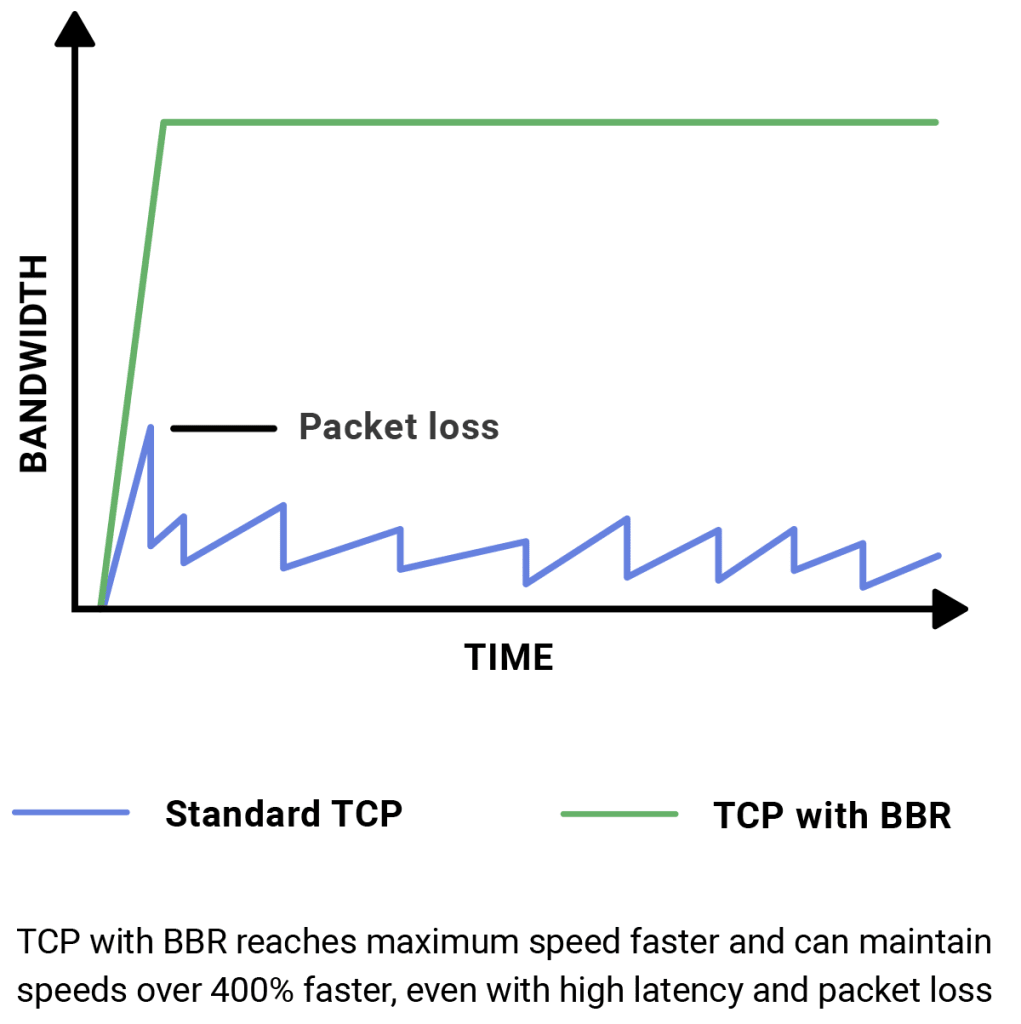

VPN Accelerator is unique to Proton VPN and comprises a set of technologies that can increase your VPN speeds by over 400% in certain situations. Thanks to this new technology, you can always enjoy the best possible VPN speeds when using our service.

One of the key technologies used by VPN Accelerator is a delay-controlled TCP congestion control algorithm called BBR. On longer paths or congested networks, BBR recovers faster from packet loss and also ramps up quicker (that is, it achieves maximum speed faster when a data transfer begins). VPN Accelerator is particularly effective at improving speeds over large distances.